Scenario

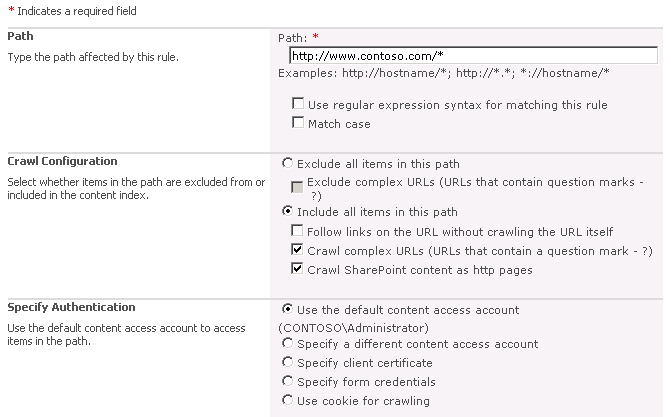

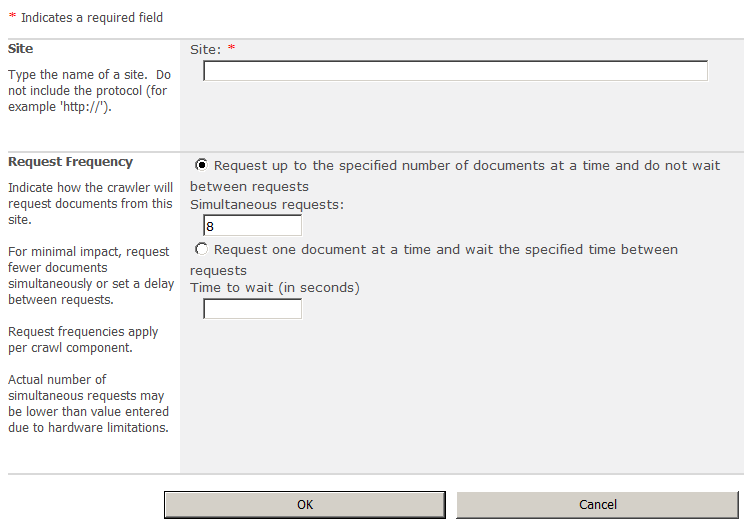

You have a SharePoint publishing site with a number of pages that display dynamic content based on a query string. You followed a process similar to Crawling Publishing Sites in SharePoint 2010 to configure SharePoint search to index the dynamic page content. Now you’d like to enrich the items in the search index with additional metadata that can be used for property restriction queries or for adding custom refiners.

Solution

Add a dynamically generated META tag to the page. SharePoint will automatically create a crawled property of type Text in the Web category, using the name attribute of the META tag as the crawled property name. You can then map the crawled property to a new managed property, whose value will be populated from the content attribute of the META tag.

Example

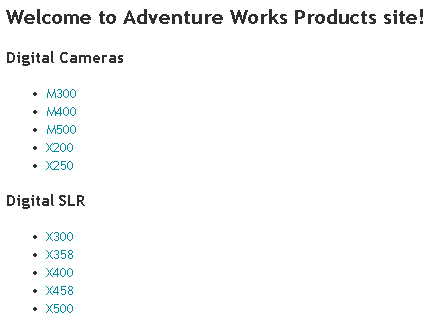

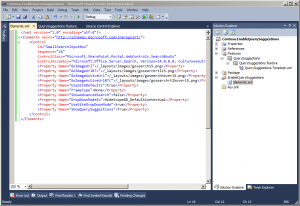

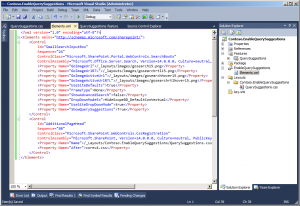

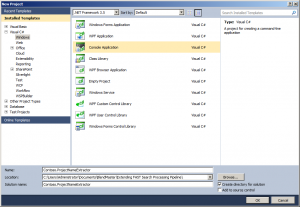

I’ll use the web part and pages created in my previous blog post and will simply extend the web part to generate a META tag.

    using System.ComponentModel;
    using System.Web;
    using System.Web.UI;
    using System.Web.UI.HtmlControls;
    using System.Web.UI.WebControls;
    using System.Web.UI.WebControls.WebParts;

    [ToolboxItemAttribute(false)]
    public class ProductInformation : WebPart
    {
        protected override void CreateChildControls()
        {
            // get the model number from query string
            string modelNumber = Page.Request.QueryString["ModelNumber"];

            if (!string.IsNullOrEmpty(modelNumber))
            {
                // assign a product category based on the model number
                string productCategory = string.Empty;

                switch (modelNumber)
                {
                    case "M300":
                    case "M400":
                    case "M500":
                    case "X200":
                    case "X250":
                        productCategory = "Digital Camera";
                        break;
                    case "X300":
                    case "X358":
                    case "X400":
                    case "X458":
                    case "X500":
                        productCategory = "Digital SLR";
                        break;
                }

                // HTML-encode the text, since the model number comes straight from the query string
                string headingText = HttpUtility.HtmlEncode(string.Format("{0} {1}", modelNumber, productCategory));

                // set the page title
                ContentPlaceHolder contentPlaceHolder = (ContentPlaceHolder)Page.Master.FindControl("PlaceHolderPageTitle");
                contentPlaceHolder.Controls.Clear();
                contentPlaceHolder.Controls.Add(new LiteralControl() { Text = headingText });

                // add the model number and product category to the page as an H2 heading
                Controls.Add(new LiteralControl() { Text = "<h2>" + headingText + "</h2>" });

                // generate a META tag
                Page.Header.Controls.Add(new HtmlMeta() { Name = "modelnumber", Content = modelNumber });
            }
        }
    }

If we refresh one of the product information pages after deploying the code change above, we should be able to see the META tag in the page source.

    <meta name="modelnumber" content="M300" />
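The same pattern extends to more than one property. As a rough sketch only: the helper name `AddMetaTag` and the tag name `productcategory` below are hypothetical and not part of the original web part. The helper skips empty values, and it also skips the case where the page has no server-side head element, in which case `Page.Header` is null.

```csharp
using System.Web.UI;
using System.Web.UI.HtmlControls;

// Hypothetical helper, not part of the original web part.
public static class MetaTagHelper
{
    // Adds <meta name="..." content="..." /> to the page head.
    // Does nothing if the page has no server-side head or the content is empty.
    public static void AddMetaTag(Page page, string name, string content)
    {
        if (page == null || page.Header == null || string.IsNullOrEmpty(content))
        {
            return;
        }

        page.Header.Controls.Add(new HtmlMeta { Name = name, Content = content });
    }
}
```

Inside `CreateChildControls`, a call such as `MetaTagHelper.AddMetaTag(Page, "productcategory", productCategory);` would then emit a second tag. Like the model number, it would need its own crawled property mapping before it could be used as a refiner.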

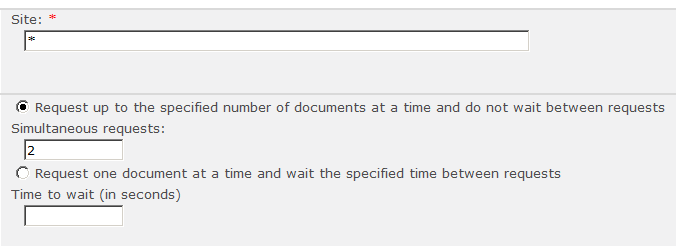

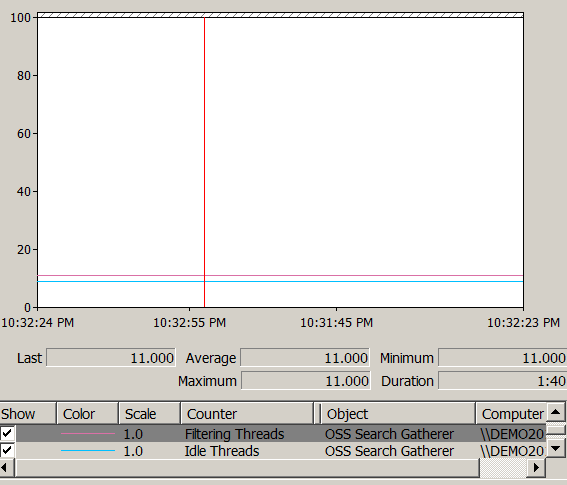

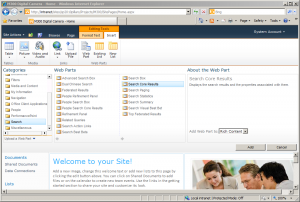

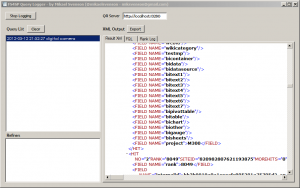

Now run a full crawl and then verify that the crawled property was created by going to Central Administration > Search Service Application > Metadata Properties > Crawled Properties (for SharePoint Search) or to Central Administration > Query SSA > FAST Search Administration > Crawled property categories > Web (for FAST Search).

Next, create a new managed property of type Text and add a mapping to the crawled property above. If using FAST Search, also check the Query property and Refiner property checkboxes.

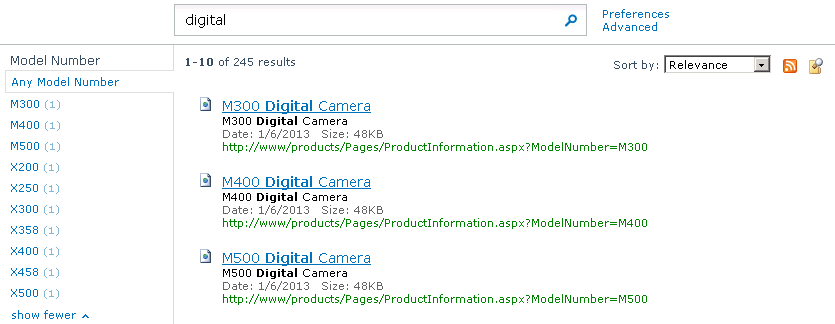

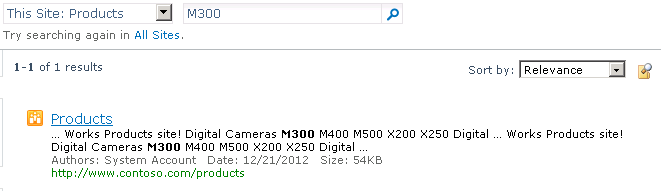

Run another full crawl. The managed property is then ready to be used for property restriction queries or as a refiner.

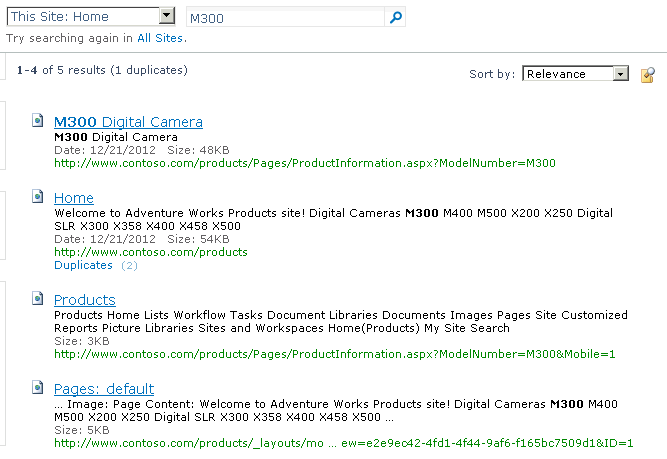

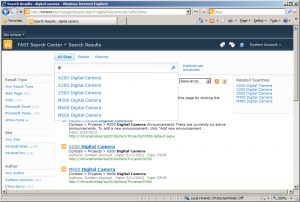

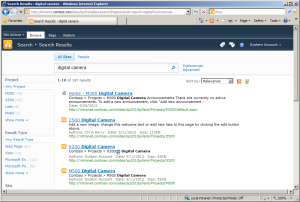

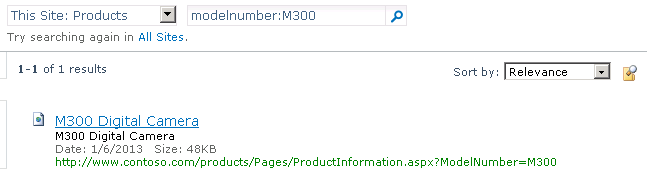

Let’s test it by running the following query first:

You can now also use the new managed property as a refiner.
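A property restriction can also be issued from code rather than from the search box. The following is a minimal sketch, not the query used in this post. It assumes the managed property was named `ModelNumber` (a hypothetical name, so substitute whatever you chose when creating it). It also assumes the code runs on a SharePoint 2010 server with references to the Microsoft.SharePoint and Microsoft.Office.Server.Search assemblies. The class and method names are illustrative.

```csharp
using System.Data;
using Microsoft.Office.Server.Search.Query;
using Microsoft.SharePoint;

// Illustrative helper; "ModelNumber" is an assumed managed property name.
public static class ModelNumberSearch
{
    public static DataTable FindByModelNumber(string siteUrl, string modelNumber)
    {
        using (SPSite site = new SPSite(siteUrl))
        {
            // Strip double quotes so the value cannot break out of the quoted restriction.
            string safeValue = modelNumber.Replace("\"", string.Empty);

            KeywordQuery query = new KeywordQuery(site);
            query.QueryText = string.Format("ModelNumber:\"{0}\"", safeValue);
            query.ResultTypes = ResultType.RelevantResults;
            query.RowLimit = 10;

            // Execute the query and copy the relevant results into a DataTable.
            ResultTableCollection results = query.Execute();
            ResultTable relevantResults = results[ResultType.RelevantResults];

            DataTable table = new DataTable();
            table.Load(relevantResults, LoadOption.OverwriteChanges);
            return table;
        }
    }
}
```

Calling `ModelNumberSearch.FindByModelNumber("http://server/sites/products", "M300")` (the URL is a placeholder) should return only the pages whose META tag carried that model number, provided the crawl and the property mapping have completed.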