Scalable and reliable real-time event processing has never been a simple task. Software architects and developers involved in such projects often spend most of their time building custom solutions to satisfy the reliability and performance requirements of their applications, and significantly less time on the actual business logic. The introduction of cloud services made it easier to scale real-time event processing applications, but properly distributing the application load across multiple worker nodes still requires complex solutions. For example, the EventProcessorHost class provides a robust way to process Event Hubs data in a thread-safe and multi-process-safe manner, but you are still responsible for hosting and managing the worker instances. Wouldn’t it be nice to stop worrying about the event processing infrastructure and focus on the business logic instead?

Azure Stream Analytics service introduction

Azure Stream Analytics is a fully managed, scalable, and highly available real-time event processing service capable of handling millions of events per second. It works exceptionally well in combination with Azure Event Hubs and enables two main scenarios:

- Perform real-time data analytics and immediately detect and react to special conditions.

- Save event data to persistent storage for archival or further analysis.

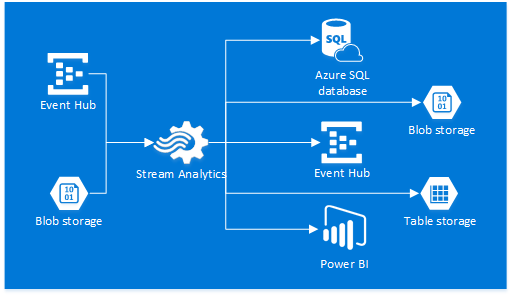

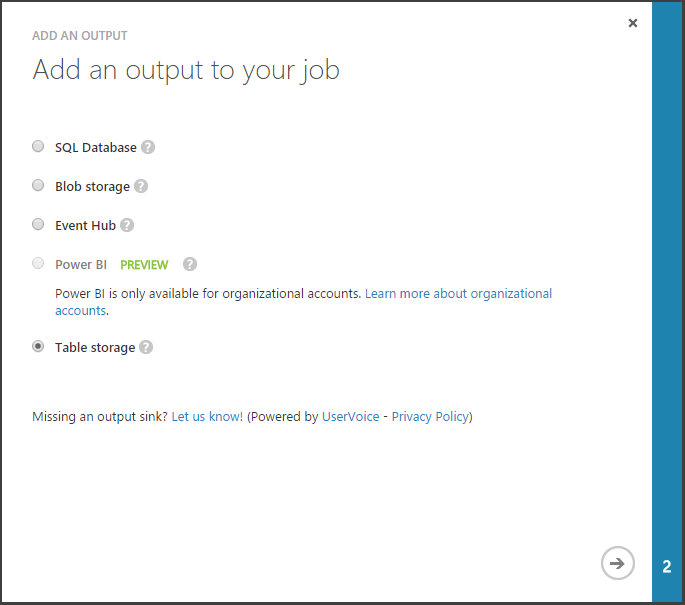

Each Stream Analytics job consists of one or more inputs, a query, and one or more outputs. At the time of writing, the available input sources are Event Hubs and Blob storage, and the output sinks are SQL Database, Blob storage, Event Hubs, Power BI, and Table storage. Pay special attention to the Power BI output option (currently in preview), which lets you build real-time Power BI dashboards with little effort. The accompanying diagram shows the available input and output options.

Queries are written in the Stream Analytics Query Language, a SQL-like language designed specifically for performing transformations and computations over streams of events.

Step-by-step Event Hub data archival to Table storage using Azure Stream Analytics

For this walkthrough, let’s assume that there’s an Event Hub called MyEventHub that receives location data from connected devices in the following JSON format:

{ "DeviceId": "mwP2KNCY3h", "EpochTime": 1436752105, "Latitude": 41.881832, "Longitude": -87.623177 }
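To make the payload format concrete, here is a minimal Python sketch that parses the sample event and checks that the four fields are present with plausible types. The `parse_location_event` helper and the `REQUIRED_FIELDS` table are hypothetical names introduced for illustration and are not part of any Azure SDK. Treating EpochTime as seconds since the Unix epoch is also an assumption based on the sample value.

```python
import json
from datetime import datetime, timezone

# Expected fields and acceptable Python types, inferred from the sample event.
REQUIRED_FIELDS = {
    "DeviceId": (str,),
    "EpochTime": (int,),
    "Latitude": (int, float),
    "Longitude": (int, float),
}


def parse_location_event(raw: str) -> dict:
    """Parse one JSON event and verify its fields (hypothetical helper)."""
    event = json.loads(raw)
    for name, allowed_types in REQUIRED_FIELDS.items():
        if name not in event:
            raise ValueError(f"missing field: {name}")
        if not isinstance(event[name], allowed_types):
            raise ValueError(f"unexpected type for field: {name}")
    return event


sample = (
    '{ "DeviceId": "mwP2KNCY3h", "EpochTime": 1436752105, '
    '"Latitude": 41.881832, "Longitude": -87.623177 }'
)
event = parse_location_event(sample)

# Assumption: EpochTime is expressed in seconds since the Unix epoch (UTC).
event_time = datetime.fromtimestamp(event["EpochTime"], tz=timezone.utc)
print(event["DeviceId"], event_time.isoformat())
```

A check like this is handy on the device or sender side, since malformed events are much easier to catch before they reach the Event Hub.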

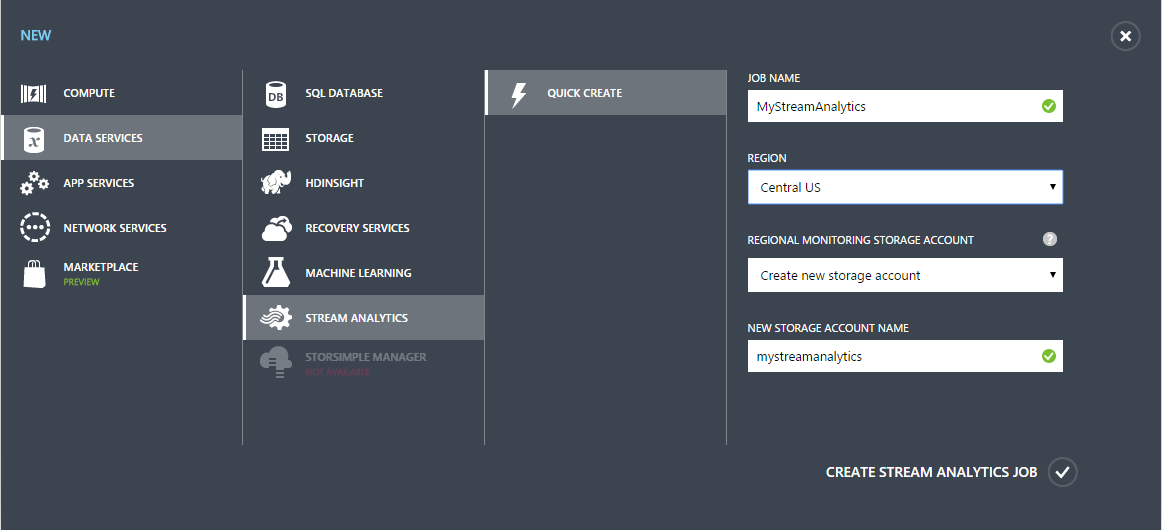

- Navigate to the Azure management portal and create a new Stream Analytics job (named MyStreamAnalytics in this walkthrough).

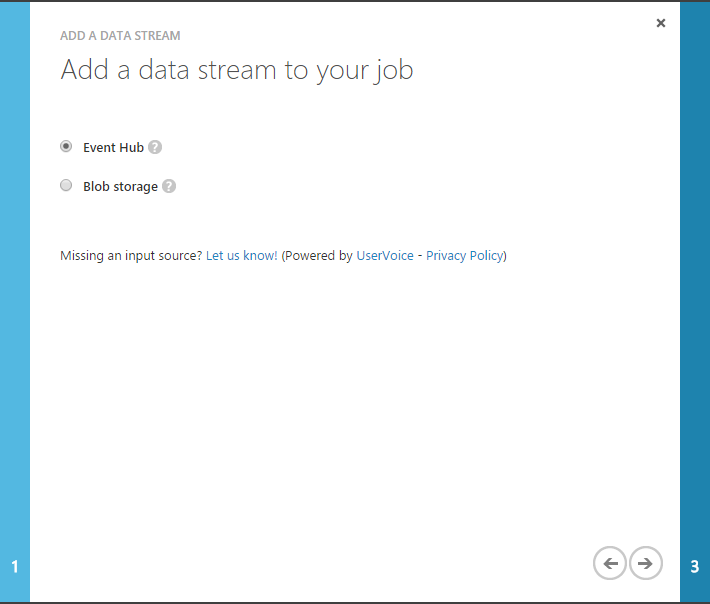

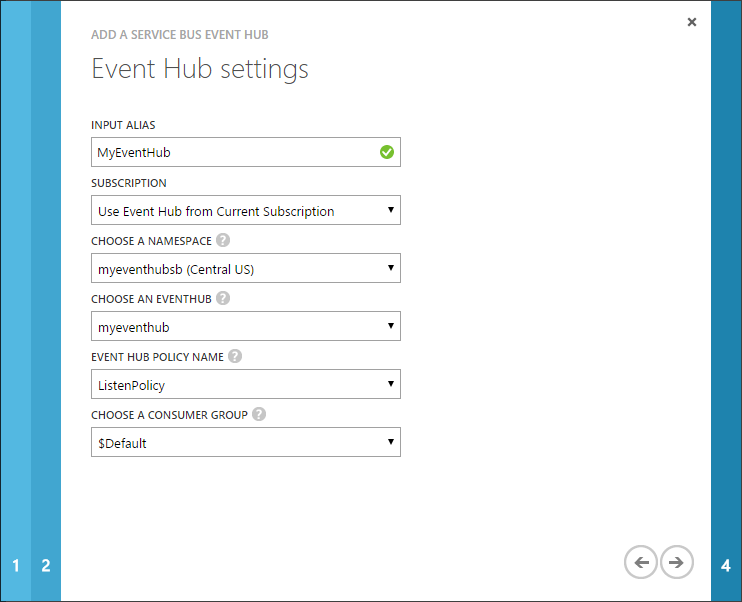

- Open the MyStreamAnalytics job and add a new Event Hub input.

- Configure the Event Hub settings.

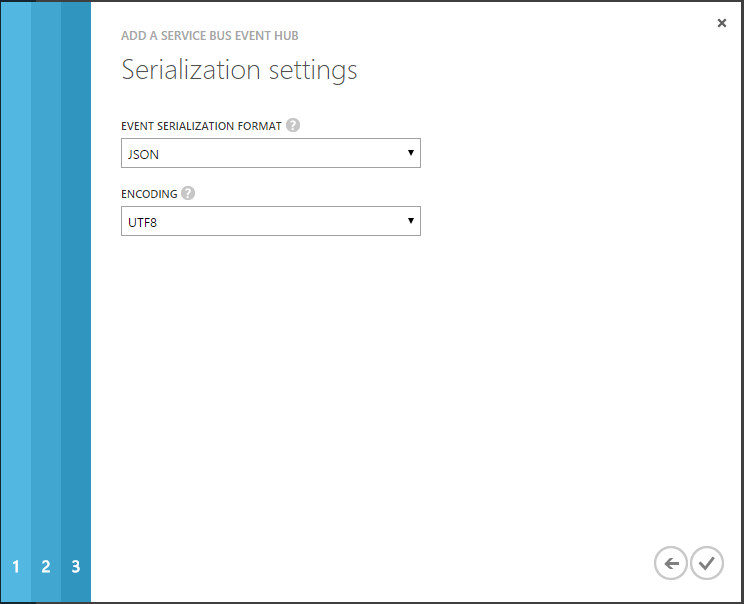

- Keep the default serialization settings.

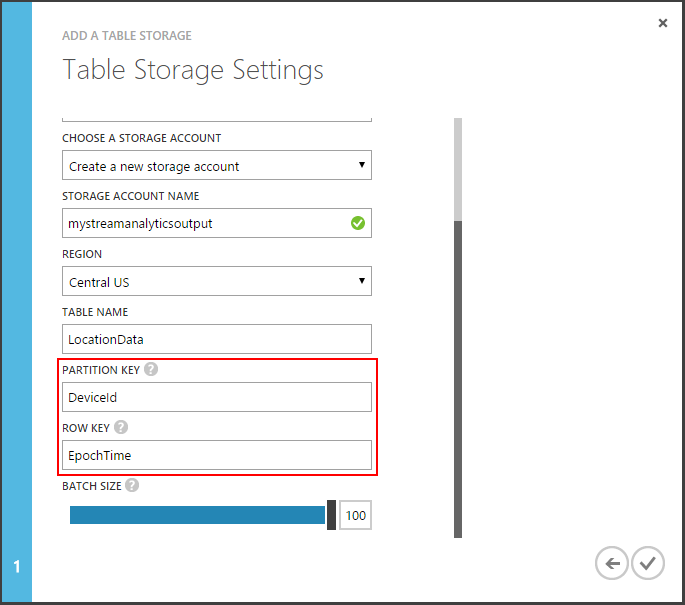

- Add a new Table storage output.

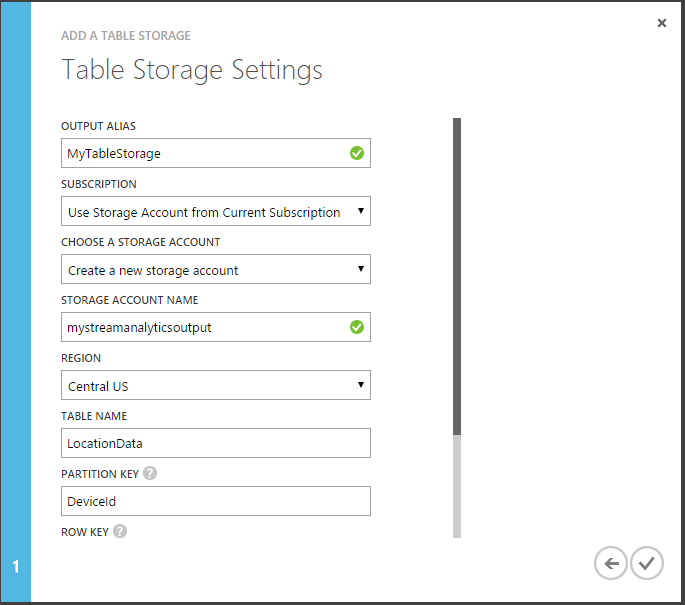

- Configure the Table storage settings.

- Use the DeviceId field as the PartitionKey and the EpochTime field as the RowKey.

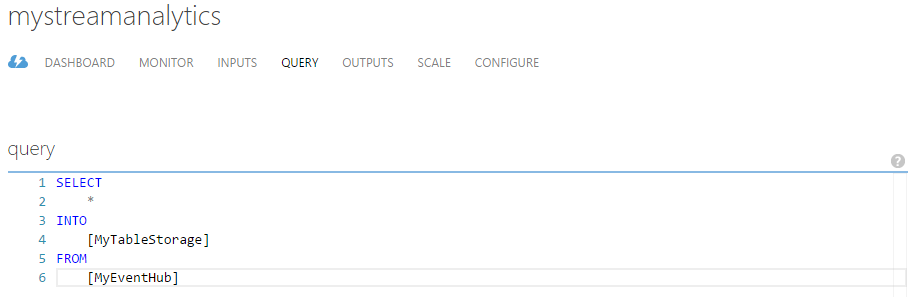

- Adjust the query to use the correct input and output alias names. A pass-through query has the form SELECT * INTO [output alias] FROM [input alias].

- Finally, start the job and watch your output storage table get populated with the Event Hub stream data without writing a single line of code!
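For intuition about what ends up in the table, the sketch below mimics the key mapping from the walkthrough in plain Python: DeviceId becomes the PartitionKey and EpochTime becomes the RowKey. It only illustrates the resulting row shape and is not how Stream Analytics is implemented. The `to_table_entity` helper is hypothetical, the second event is made up, and keeping the remaining event fields as entity properties is an assumption.

```python
def to_table_entity(event: dict) -> dict:
    """Shape an event like a Table storage row (hypothetical helper, illustration only)."""
    # Assumption: the original event fields are kept as entity properties.
    entity = dict(event)
    # Table storage keys are strings, so both values are converted.
    entity["PartitionKey"] = str(event["DeviceId"])
    entity["RowKey"] = str(event["EpochTime"])
    return entity


events = [
    {"DeviceId": "mwP2KNCY3h", "EpochTime": 1436752105,
     "Latitude": 41.881832, "Longitude": -87.623177},
    # Second reading is invented for illustration: same device, 60 seconds later.
    {"DeviceId": "mwP2KNCY3h", "EpochTime": 1436752165,
     "Latitude": 41.882000, "Longitude": -87.623500},
]

rows = [to_table_entity(e) for e in events]
for row in rows:
    print(row["PartitionKey"], row["RowKey"], row["Latitude"], row["Longitude"])
```

With this key choice, all readings from one device land in the same partition, ordered by timestamp. The flip side is that two events from the same device with an identical EpochTime would map to the same PartitionKey and RowKey pair, so the timestamp resolution needs to be fine enough for your devices' reporting rate.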

Additional resources